Overview

In this tutorial we will demonstrate how to integrate Realm’s Global Notifier with a machine learning service from IBM’s Watson Bluemix service.

This service will perform text and facial recognition processing on an image you provide and return descriptions of any faces found in the image, as well as any text that the machine learning algorithms find in that image.

The app we’re going to build is called Scanner. It is a very simple single-view application that allows users to take a picture (or select an image from the photo library) and get back detailed information about that picture identified by Watson. The application is implemented in 2 parts:

- A mobile client that allows the user to take pictures with the phone’s camera; these images are synchronized with the remote Realm Object Server.

- An event handler on a Realm Object Server that watches for new images taken by mobile clients and then sends them to be analyzed by IBM’s Watson via the Bluemix service.

Scanner is implemented as a native app for both iOS and Android. The GitHub repo for the source code is provided later in this tutorial, but we encourage you to follow along as we construct the app in Swift (iOS) or Java (Android).

The server side is implemented as a single JavaScript file which will be explained as we go along as well.

Prerequisites

In order to get up and running with this tutorial, you will need to have a few development tools set up, and you will need to sign up for a free API key for the IBM Bluemix service that does the image processing. All the prerequisites and setup steps are listed below:

For iOS, you will need Xcode 8.1 or higher and an active Apple developer account (in order to sign the application and run on real hardware); for Android you will need Android Studio version 2.2 or higher.

For the Realm Object Server, you will need a Macintosh running macOS 10.11 or higher, or any x86_64 glibc-based Linux distribution. Follow the installation instructions for the Professional or Enterprise Editions provided via email. You can sign up for a free 30-day trial of the Professional Edition here.

Make note of the admin user account & password you created when the Realm Object Server was registered - we will use this as the user for our demo application in this tutorial.

For access to IBM’s Watson visual recognition system it will be necessary to create a Bluemix trial account using the following URL: https://console.ng.bluemix.net/registration/. The steps require an active/verifiable email address and will result in the generation of an API key for the Watson components of the Bluemix services. The steps are summarized as follows:

- Follow the URL above to create an IBM Bluemix account.

- Receive the verification email and click the link to verify.

- Log in to the IBM Bluemix portal.

- Select a region. There are 3 choices (Sydney, United Kingdom, and US South); pick the region that is closest to you.

- Name your workspace; this name can be anything you like.

- Click the “I’m Ready” button to create the workspace.

- Once on the Apps page, click the “hamburger menu” (upper-left corner of the browser), then click “Watson” to get to the Watson page.

- On the Watson page, click “Start with Watson”.

- On the Watson Services page, click the “Analyze Images” getting-started item.

- At the top of the following page, click the “free” plan, then “Create Project”.

- Lastly, click “service credentials”, then “view credentials”, and copy the JSON block that includes the api_key. You will need it later in this tutorial to set up access for your application.

Starting the Object Server & Finding the Admin Token

Before we dive into this tutorial, it’s a good idea to have the Realm Object Server started and identify the Admin Token that will be needed in the implementation sections to follow.

If you downloaded the macOS version of the Realm Platform, follow the instructions in the download kit to start the server.

Once the server is running you will need a copy of the “admin token” which is displayed in the terminal window as part of the startup process. The admin token looks very much like this:

Your admin access token is: ewoJImlkZW50aXR5IjogImFkbWluIiwKCSJhY2Nlc3MiOiBbInVwbG9hZCIsICJkb3dubG9hZCIsICJtYW5hZ2UiXQp9Cg==:A+UUfCEap6ikwX5nLB0mBcjHx1RVqBQjmYJ3jBNP00xum65DT0tZV/oq2W8VIeuPiASXt3Ndn5TkahTU6a5UxQCnODfu0aGclgBuHmv5CKXwm4qr4bwL+yd80WlRojdmYrYUf5jjbQjuBLUXkhyX554TjHOmANYzw1fv6sp1YXKDuKDkCHpH8+GIG5u0Xjp6IUK6FtPtMbiPS1mMZ3YnxHm5BB2RQH3ywGxlsYLFnA9l4+Dc++sEQGWviYCCNBL9fD49zFPdvfBoc1WqsFi3PKKcqyXfGdnyYucrDfo/4Rn8mT95lAqJGCcIRWwiNYKI805uHcI+JFv6/YXJB0wEMw==

The token itself is all the text after “Your admin access token is:”, up to and including the trailing == characters.

If you’ve installed any of the Linux versions of the software, the server is started automatically for you and the admin token can be found in the file /etc/realm/admin_token.base64.

You will need this token for the server sections of this tutorial.
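If you want to sanity-check that you copied the whole token, note that in the sample above the part before the colon is base64-encoded JSON: it decodes to an identity of "admin" and a list of access rights. The part after the colon appears to be a signature. The sketch below assumes that two-part layout, which is inferred from the sample rather than from documented Realm behavior; decodeAdminToken is a hypothetical helper, not part of the Realm SDK, and it only detects damage to the payload half.

```javascript
// Hypothetical helper: decodes the payload half of a Realm admin token.
// Assumes the "<base64 JSON payload>:<base64 signature>" layout seen in the
// sample token above; this layout is inferred, not taken from Realm docs.
function decodeAdminToken(token) {
  const trimmed = token.trim();
  const sep = trimmed.indexOf(":");
  if (sep < 0) {
    throw new Error("Expected '<payload>:<signature>'; was the token truncated?");
  }
  const payloadJson = Buffer.from(trimmed.slice(0, sep), "base64").toString("utf8");
  // JSON.parse throws if the payload was copied incompletely
  return JSON.parse(payloadJson);
}

// Payload from the sample token above; the signature is shortened here.
const sample =
  "ewoJImlkZW50aXR5IjogImFkbWluIiwKCSJhY2Nlc3MiOiBbInVwbG9hZCIsICJkb3dubG9hZCIsICJtYW5hZ2UiXQp9Cg==" +
  ":A+UUfCEap6ikwX5nLB0mBcjHx1RVqBQjmYJ3j";
const claims = decodeAdminToken(sample);
console.log(claims.identity); // prints admin
console.log(claims.access);   // prints [ 'upload', 'download', 'manage' ]
```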

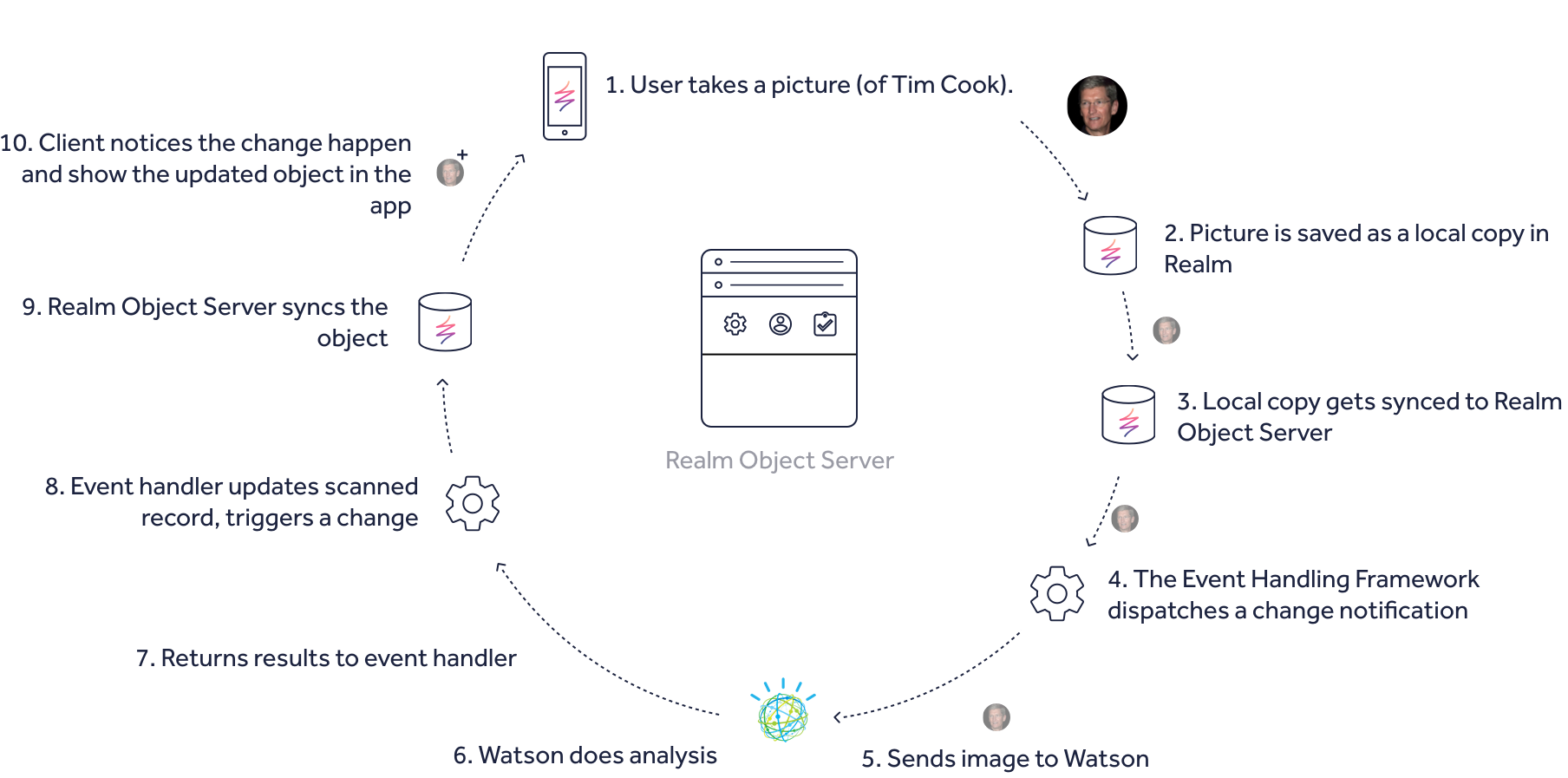

Architecture

The architecture of this client and server is quite simple:

There are 3 main components:

The Realm Mobile Clients

The client is a small application that allows the user to take a picture; it uses a Realm Database to store the images and to communicate with the Realm Object Server.

The Realm Object Server

This is the part of the system that synchronizes data between mobile clients.

The Realm Global Notifier

This is the part of the Realm Object Server that can be programmed to respond to changes in a Realm database and perform actions based on those changes. In this case the action will be to send pictures captured by mobile clients to the IBM Bluemix recognition API and write the results (any text, a classification of the image, and descriptions of any people in it) back to the client Realms.

Operation

The operation of the system is equally simple:

Client devices take pictures that are stored in a Realm Database and synchronized with the Realm Object Server. An event listener on the Realm Object Server observes client Realms and notices when new pictures have been taken by client devices. The listener sends these images to be processed by the IBM Watson recognition service. When results come back, they are written to the specific client’s Realm and synchronized back to the respective mobile client, where the user can view them.

Models & Realms

The cornerstone of information sharing in the Realm Platform is a shared model (or schema) between the clients and the server. Realm models are simple to define, yet cover the basic data types found in most programming languages (e.g., Booleans, Integers, Doubles, Floats, and Strings) as well as a few richer types such as Dates, a raw binary data type, and collection types like Lists.

More info on supported types and model definitions can be found in the Realm Database documentation; the version linked to here is for Apple’s Swift, but matching documents cover Java and other language bindings Realm supports.

There’s one model needed to support this text scanning app:

The “Scan” model, which has a few string fields to hold the status and the results returned by the Watson Bluemix image recognition service, a “scan id” to uniquely identify each new event (picture taken), and a raw binary data field to hold the bytes of the picture that is synchronized between the mobile device and the Realm Object Server.

Once a client starts up, this model is synchronized and exists both on the client and on the server. The model is accessed through a named entity called a Realm. This example uses a Realm containing a single model, but Realms can hold multiple models and, conversely, a single app can access many Realms. For this example we’ll call the Realm scanner.
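The server script in this tutorial never declares the Scan schema itself; it reads whatever the clients sync. If you did want to open a scanner Realm from Node.js with an explicit schema, a Realm JavaScript object schema mirroring the Swift model used later might look like the sketch below. It assumes the Realm JS { name, properties } schema format, with "data" as the raw binary type and { type, optional: true } for optional fields; ScanSchema is a hypothetical name and not part of the original project.

```javascript
// Sketch of a Realm JS object schema mirroring the Swift Scan model.
// Assumption: Realm JS's { name, properties } schema format, where "data"
// is the raw binary type and optional fields use { type, optional: true }.
const ScanSchema = {
  name: "Scan",
  properties: {
    scanId: "string", // unique ID for each picture submitted
    status: "string", // processing state, e.g. "Uploading" or "Processing"
    textScanResult: { type: "string", optional: true },
    classificationResult: { type: "string", optional: true },
    faceDetectionResult: { type: "string", optional: true },
    imageData: { type: "data", optional: true }, // the picture's bytes
  },
};

// The property names are expected to match the client model exactly
console.log(Object.keys(ScanSchema.properties).join(", "));
```

With the Realm JS SDK you would pass it as schema: [ScanSchema] in the Realm configuration.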

Realm Paths

On the client side, where a picture is selected, there is a local on-device Realm called scanner. On the Realm Object Server there is one Realm per mobile user (identified by the user ID in its path), also called scanner; these contain the data that is synchronized from the mobile device to the server and back again as objects are added, deleted, or updated.

On the Object Server the scanner Realms exist in a hierarchy of Realms arranged much like a filesystem: a root (“/”), a user ID that is unique to each mobile user and represented by a long string of numbers, a path separator (another “/” character), and then the name of the Realm that contains the models and their data. This combination of root, user ID, and Realm name is called the Realm Path, and it looks very much like a file system path or even a URL.

To see how this looks in action, let’s consider 2 hypothetical users of our Scanner app. Their Realms on the Object Server might be written like this:

/12345467890/scanner

/9876543210/scanner

The Realm Object Server allows us to access Realms as URLs, so we actually refer to a synchronized Realm from inside a mobile app as follows:

realm://127.0.0.1:9080/~/scanner

Here “realm://127.0.0.1:9080” represents the access scheme (“realm://”), the server IP address (or DNS hostname), and the port number of the Realm Object Server. The “~” (tilde) character is shorthand for “my user ID”, and “scanner” is, as previously mentioned, the name of the Realm that contains our Scan model.

This concept of a Realm URL will become clearer as we implement the client and server sides of Scanner.
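To make the mapping concrete, the sketch below splits a Realm URL into scheme-less parts and expands the “~” into a user ID, producing a server-side path like those shown above. In practice Realm handles this for you; the function names are hypothetical, and the code only handles the realm://host:port/path form used in this tutorial.

```javascript
// Hypothetical helpers illustrating the Realm URL and path conventions above.
// They use plain string handling and are not part of any Realm SDK.
function parseRealmUrl(url) {
  // scheme "realm://", host (IP or DNS name), port, then the Realm path
  const m = /^realm:\/\/([^\/:]+):(\d+)(\/.*)$/.exec(url);
  if (!m) {
    throw new Error("Not a realm://host:port/path URL: " + url);
  }
  return { host: m[1], port: Number(m[2]), path: m[3] };
}

// "~" at the start of a path is shorthand for the logged-in user's ID
function resolveUserPath(path, userId) {
  return path.replace(/^\/~\//, "/" + userId + "/");
}

const parsed = parseRealmUrl("realm://127.0.0.1:9080/~/scanner");
console.log(parsed.host, parsed.port, parsed.path); // 127.0.0.1 9080 /~/scanner
console.log(resolveUserPath(parsed.path, "12345467890")); // /12345467890/scanner
```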

The Realm Global Notifier

The final concept, and the driving force behind this demo, is the Realm Global Notifier. This is the mechanism that allows the Object Server to respond to changes in the Realms it manages. Unlike listeners attached to a single Realm, this API allows developers to listen for changes across many Realms.

Global Notifiers are written in JavaScript and run in the context of a Node.js application. A notifier is registered with 2 primary parameters:

Change Event Callback - this specifies what is to be done once a change has been detected. In our case this will be calling the IBM Bluemix recognition API and processing any results that come back from the remote server.

Regex Pattern - this specifies which Realms on the server the listener applies to. In our case we will be listening to Realms whose paths match the regular expression “^/.*/scanner$”, i.e., the scanner Realm created by each user of the Scanner app.
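The pattern is an ordinary regular expression, so anchors matter. The sketch below evaluates the server script's NOTIFIER_PATH and the looser “.*/scanner” against a few paths using JavaScript's RegExp. It assumes the Object Server tests the pattern against Realm paths in the same way; the scanner-backup path is invented for the comparison.

```javascript
// The listener's regular expression, as used by the server script.
const NOTIFIER_PATH = "^/.*/scanner$";
const anchored = new RegExp(NOTIFIER_PATH);
const unanchored = new RegExp(".*/scanner");

const paths = [
  "/12345467890/scanner",
  "/9876543210/scanner",
  "/12345467890/scanner-backup", // hypothetical Realm with a similar name
];

for (const p of paths) {
  // RegExp.test() looks for a match anywhere in the string, so only the
  // ^...$ anchors rule out partial matches such as "scanner-backup"
  console.log(p, anchored.test(p), unanchored.test(p));
}
```

The first two paths print true for both patterns; the last prints false for the anchored pattern and true for the unanchored one.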

Here is an example of a change event callback:

var change_event_callback = function(change_object) {

// Called when changes are made to any Realm which matches the given regular expression

//

// The change_object has the following parameters:

// path: The path of the changed Realm

// realm: The changed realm

// oldRealm: The changed Realm at the old state before the changes were applied

// changes: The change indexes for all added, removed, and modified objects in the changed Realm.

// This object is a hashmap of object types to arrays of indexes for all changed objects:

// {

// object_type_1: {

// insertions: [indexes...],

// deletions: [indexes...],

// modifications: [indexes...]

// },

// object_type_2:

// ...

// }

};

To register a Global Notifier you call this API:

Realm.Sync.addListener(server_url, admin_user, regex, 'change', change_callback);

Notice that the remaining parameters include the URL of the Realm Object Server that contains the Realms, an admin user credential created from the Admin Token we saw when we started the Realm Object Server, and the name of the event to listen for ('change').

Don’t be concerned if this syntax isn’t familiar; these are examples within the larger framework of how a Realm event is processed, and they will be shown in context as we implement the server side of the Scanner application.
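As a self-contained sketch of what a change callback does with the change object described above, the code below picks out newly inserted Scan objects whose status is "Uploading", much as the server script does. The fake event is a plain object that mimics the documented shape (path, realm, changes; oldRealm is omitted); it is not a real Realm, and newUploadedScans is a hypothetical name.

```javascript
// Hypothetical sketch: pick out newly inserted Scan objects from a change
// event. The fake event below only mimics the documented shape.
const kUploadingStatus = "Uploading";

function newUploadedScans(changeEvent) {
  const scanChanges = changeEvent.changes.Scan;
  if (!scanChanges) {
    return []; // this change did not touch the Scan model
  }
  const scans = changeEvent.realm.objects("Scan");
  // insertions holds indexes into realm.objects("Scan")
  return scanChanges.insertions
    .map((index) => scans[index])
    .filter((scan) => scan && scan.status === kUploadingStatus);
}

const fakeEvent = {
  path: "/12345467890/scanner",
  realm: {
    objects: (type) =>
      type === "Scan"
        ? [
            { scanId: "a", status: "Completed" },
            { scanId: "b", status: kUploadingStatus },
          ]
        : [],
  },
  changes: { Scan: { insertions: [1], deletions: [], modifications: [0] } },
};

console.log(newUploadedScans(fakeEvent).map((s) => s.scanId)); // [ 'b' ]
```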

With these concepts we have all we need to implement our Scanner.

Implementation

Building the Server Application

We will start with the Realm Object Server implementation, since it is needed regardless of which client application (iOS or Android) you choose to use. To continue, we assume that the following are true:

- You have downloaded and installed a version of the Realm Platform Professional or Enterprise Edition.

- You have successfully started the server and can access (copy) the Admin Token for your running server.

- You can log in to your Linux server or access the Mac on which the Realm Object Server is running.

- You have obtained an API key for the IBM Bluemix Watson service.

Creating the server side scripts.

Create a directory - You will need to create a new directory on your server (or in a convenient place on your Mac if running the Mac version) in which to place the server files. We are using the name ScannerServer which will include the Node.js package dependency file. Change into this directory and create/edit a file called package.json - this is a Node.js convention that is used to specify external package dependencies for a Node application as well as specifics about the application itself (its name, version number, etc). The contents should be:

{

"name": "Scanner",

"version": "0.0.1",

"description": "Use Realm Object Server's event-handling capabilities to react to uploaded images and send them to Watson for image recognition.",

"main": "index.js",

"author": "Realm",

"dependencies": {

"realm": "^2.0.0",

"watson-developer-cloud": "^2.11.0"

}

}

Notice that there are two dependencies for our server:

- The first is Realm’s Node.js SDK (the realm package), which provides the Global Notifier API; the ^2.0.0 range accepts any 2.x release from 2.0.0 onward.

- The second is a Node.js module for the Watson service.

Both of these will be automatically downloaded for us by NPM, the Node.js package manager.

Once you have copied this into place, run the command:

npm install

This will download, unpack, and configure all the modules.

In the same directory we will create a file called index.js, which is the Node.js application that monitors the client Realms for changes and then reacts by sending images to the Watson Recognition API for processing.

The file itself is listed below; it is fairly long, so we recommend you copy and paste the content into the index.js file you created. Several key pieces of information need to be edited for this application to function: in index.js, replace the placeholder values of REALM_ADMIN_TOKEN and BLUEMIX_API_KEY, and the placeholder passed to Realm.Sync.setFeatureToken (INSERT_YOUR_REALM_ACCESS_TOKEN), with your admin token, the API key generated for you when you signed up for the IBM Bluemix trial, and the access token that came with your download of Realm Platform Professional or Enterprise Edition, respectively.
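As an alternative to pasting the long admin token into index.js, you could read it from the file the server writes. The sketch below is a hypothetical helper (loadAdminToken is not part of the listing); its default path is the Linux location mentioned earlier, and on macOS you would pass the realm-object-server/admin_token.base64 path from the unzipped download instead.

```javascript
const fs = require("fs");

// Hypothetical helper: read the Realm admin token from a file instead of
// hard-coding it. Default path: the Linux location given earlier.
function loadAdminToken(file = "/etc/realm/admin_token.base64") {
  const token = fs.readFileSync(file, "utf8").trim(); // drop trailing newline
  if (token.length === 0) {
    throw new Error("Admin token file is empty: " + file);
  }
  return token;
}
```

In index.js the placeholder line would then become var REALM_ADMIN_TOKEN = loadAdminToken();, with the helper defined above it.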

'use strict';

var fs = require('fs');

var Realm = require('realm');

var VisualRecognition = require('watson-developer-cloud/visual-recognition/v3');

// Insert the Realm admin token

// Linux: `cat /etc/realm/admin_token.base64`

// macOS (from within zip): `cat realm-object-server/admin_token.base64`

var REALM_ADMIN_TOKEN = "INSERT_YOUR_REALM_ADMIN_TOKEN";

// API KEY for IBM Bluemix Watson Visual Recognition

// Register for an API Key: https://console.ng.bluemix.net/registration/

var BLUEMIX_API_KEY = "INSERT_YOUR_API_KEY";

// The URL to the Realm Object Server

var SERVER_URL = 'realm://127.0.0.1:9080';

// The path used by the global notifier to listen for changes across all

// Realms that match.

var NOTIFIER_PATH = "^/.*/scanner$";

//Insert the Realm access token which came with your download of Realm Platform Professional Edition

Realm.Sync.setFeatureToken('INSERT_YOUR_REALM_ACCESS_TOKEN');

/*

Common status text strings

The mobile app listens for changes to the scan.status text value to update

its UI with the current state. These values must be the same in both this file

and the mobile client code.

*/

var kUploadingStatus = "Uploading";

var kProcessingStatus = "Processing";

var kFailedStatus = "Failed";

var kClassificationResultReady = "ClassificationResultReady";

var kTextScanResultReady = "TextScanResultReady";

var kFaceDetectionResultReady = "FaceDetectionResultReady";

var kCompletedStatus = "Completed";

// Setup IBM Bluemix SDK

var visual_recognition = new VisualRecognition({

api_key: BLUEMIX_API_KEY,

version_date: '2016-05-20'

});

/*

Utility Functions

Various functions to check the integrity of data.

*/

function isString(x) {

return x !== null && x !== undefined && x.constructor === String

}

function isNumber(x) {

return x !== null && x !== undefined && x.constructor === Number

}

function isBoolean(x) {

return x !== null && x !== undefined && x.constructor === Boolean

}

function isObject(x) {

return x !== null && x !== undefined && x.constructor === Object

}

function isArray(x) {

return x !== null && x !== undefined && x.constructor === Array

}

function isRealmObject(x) {

return x !== null && x !== undefined && x.constructor === Realm.Object

}

function isRealmList(x) {

return x !== null && x !== undefined && x.constructor === Realm.List

}

var change_notification_callback = function(change_event) {

let realm = change_event.realm;

let changes = change_event.changes.Scan;

// Ignore change events that did not touch any Scan objects

if (!changes) {

return;

}

let scanIndexes = changes.insertions;

console.log(changes);

// Get the Scan objects to process

var scans = realm.objects("Scan");

for (var i = 0; i < scanIndexes.length; i++) {

let scanIndex = scanIndexes[i];

// Retrieve the scan object from the Realm with index

let scan = scans[scanIndex];

if (isRealmObject(scan)) {

if (scan.status == kUploadingStatus) {

console.log("New scan received: " + change_event.path);

console.log(JSON.stringify(scan))

realm.write(function() {

scan.status = kProcessingStatus;

});

try {

fs.unlinkSync("./subject.jpeg");

} catch (err) {

// ignore

}

var imageBytes = new Uint8Array(scan.imageData);

var imageBuffer = Buffer.from(imageBytes); // new Buffer() is deprecated in Node.js

fs.writeFileSync("./subject.jpeg", imageBuffer);

var params = {

images_file: fs.createReadStream('./subject.jpeg')

};

function errorReceived(err) {

console.log("Error: " + err);

realm.write(function() {

scan.status = kFailedStatus;

});

}

// recognize text

visual_recognition.recognizeText(params, function(err, res) {

if (err) {

errorReceived(err);

} else {

console.log("Visual Result: " + res);

var result = res.images[0];

var finalText = "";

if (result.text && result.text.length > 0) {

finalText = "**Text Scan Result**\n\n";

finalText += result.text;

}

console.log("Found Text: " + finalText);

realm.write(function() {

scan.textScanResult = finalText;

scan.status = kTextScanResultReady;

});

}

});

// classify image

/*{

"custom_classes": 0,

"images": [{

"classifiers": [{

"classes": [{

"class": "coffee",

"score": 0.900249,

"type_hierarchy": "/products/beverages/coffee"

}, {

"class": "cup",

"score": 0.645656,

"type_hierarchy": "/products/cup"

}, {

"class": "food",

"score": 0.524979

}],

"classifier_id": "default",

"name": "default"

}],

"image": "subject.jpeg"

}],

"images_processed": 1

}*/

visual_recognition.classify(params, function(err, res) {

if (err) {

errorReceived(err);

} else {

console.log("Classify Result: " + res);

var classes = res.images[0].classifiers[0].classes;

console.log(JSON.stringify(classes));

realm.write(function() {

var classificationResult = "";

if (classes.length > 0) {

classificationResult += "**Classification Result**\n\n";

}

for (var i = 0; i < classes.length; i++) {

var imageClass = classes[i];

classificationResult += "Class: " + imageClass.class + "\n";

classificationResult += "Score: " + imageClass.score + "\n";

if (imageClass.type_hierarchy) {

classificationResult += "Type: " + imageClass.type_hierarchy + "\n";

}

classificationResult += "\n";

}

scan.classificationResult = classificationResult;

scan.status = kClassificationResultReady;

});

}

});

// Detect Faces

visual_recognition.detectFaces(params, function(err, res) {

if (err) {

errorReceived(err);

} else {

console.log("Faces Result: " + res);

console.log(JSON.stringify(res));

realm.write(function() {

var faces = res.images[0].faces;

var faceDetectionResult = "";

if (faces.length > 0) {

faceDetectionResult = "**Face Detection Result**\n\n";

faceDetectionResult += "Number of faces detected: " + faces.length + "\n";

for (var i = 0; i < faces.length; i++) {

var face = faces[i];

faceDetectionResult += "Gender: " + face.gender.gender + ", Age: " + face.age.min + " - " + face.age.max;

faceDetectionResult += "\n";

}

}

scan.faceDetectionResult = faceDetectionResult;

scan.status = kFaceDetectionResultReady;

});

}

});

}

}

}

};

//Create the admin user

var admin_user = Realm.Sync.User.adminUser(REALM_ADMIN_TOKEN, SERVER_URL);

//Callback on Realm changes

Realm.Sync.addListener(SERVER_URL, admin_user, NOTIFIER_PATH, 'change', change_notification_callback);

console.log('Listening for Realm changes across: ' + NOTIFIER_PATH);

// End of index.js

Running the Server Script

Once the admin token, API key, and access token have been edited, run the Scanner server script with the command:

node index.js

Once it starts, the script waits for changes synced from the mobile clients. Next we will create a simple iOS or Android app that uses this image-recognition service.
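Before moving on to the clients, it can be worth checking the result formatting offline. The sketch below is a hypothetical refactoring (formatClassification is not in the listing) that pulls the string-building from the classify callback into a pure function and runs it on the sample response quoted in the index.js comments, so no Watson call is needed.

```javascript
// Hypothetical refactoring of the classify callback's formatting into a pure
// function. Input shape: the sample response quoted in the index.js comments.
function formatClassification(res) {
  const classes = res.images[0].classifiers[0].classes;
  let result = classes.length > 0 ? "**Classification Result**\n\n" : "";
  for (const imageClass of classes) {
    result += "Class: " + imageClass.class + "\n";
    result += "Score: " + imageClass.score + "\n";
    if (imageClass.type_hierarchy) {
      result += "Type: " + imageClass.type_hierarchy + "\n";
    }
    result += "\n";
  }
  return result;
}

const sampleResponse = {
  custom_classes: 0,
  images: [{
    classifiers: [{
      classes: [
        { class: "coffee", score: 0.900249, type_hierarchy: "/products/beverages/coffee" },
        { class: "cup", score: 0.645656, type_hierarchy: "/products/cup" },
        { class: "food", score: 0.524979 },
      ],
      classifier_id: "default",
      name: "default",
    }],
    image: "subject.jpeg",
  }],
  images_processed: 1,
};

console.log(formatClassification(sampleResponse));
```

Inside the realm.write block, the callback would then only need scan.classificationResult = formatClassification(res).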

Building the Mobile Client

Completed Scanner Sources for iOS and Android

If you would like to download the completed projects for iOS and Android without working through the tutorial, they are available from Realm’s GitHub account:

https://github.com/realm-demos/Scanner.git

Scanner for iOS

Prerequisites:

This project uses CocoaPods. To install CocoaPods, run the command sudo gem install cocoapods; this will ask you for an admin password. For more info on CocoaPods, please see http://cocoapods.org.

Part I - Create and configure a Scanner Project with Xcode

- Open Xcode and create a new “Single View Application” for iOS. Name the application “Scanner”, choose “Swift” as the language, and save it to a convenient location on your drive.

- Quit Xcode.

- Open a terminal window and change into the newly created Scanner directory; initialize CocoaPods by running the command pod init, which creates a new Podfile template.

- Edit the Podfile (you can do this in Xcode or any text editor). After the line that reads use_frameworks!, add the directive

pod 'RealmSwift'

Save the changes to the file (and if necessary, quit Xcode once again).

- From the terminal window run the command pod update. This causes CocoaPods to download and configure the RealmSwift module and to create a new Xcode workspace file that bundles together all of the external modules you’ll need to create the Scanner app.

- Open the newly created Scanner.xcworkspace file, and use this workspace file instead of the standard Scanner.xcodeproj file from now on.

- Installing the sample image and icon - So that you can run this tutorial in the simulator, we are going to download two additional resources. They are linked below: download and unpack the zip file, then drag each file, one at a time, onto your Xcode project window and allow Xcode to copy the resource into the project. Make sure to check the box “Copy items if needed”.

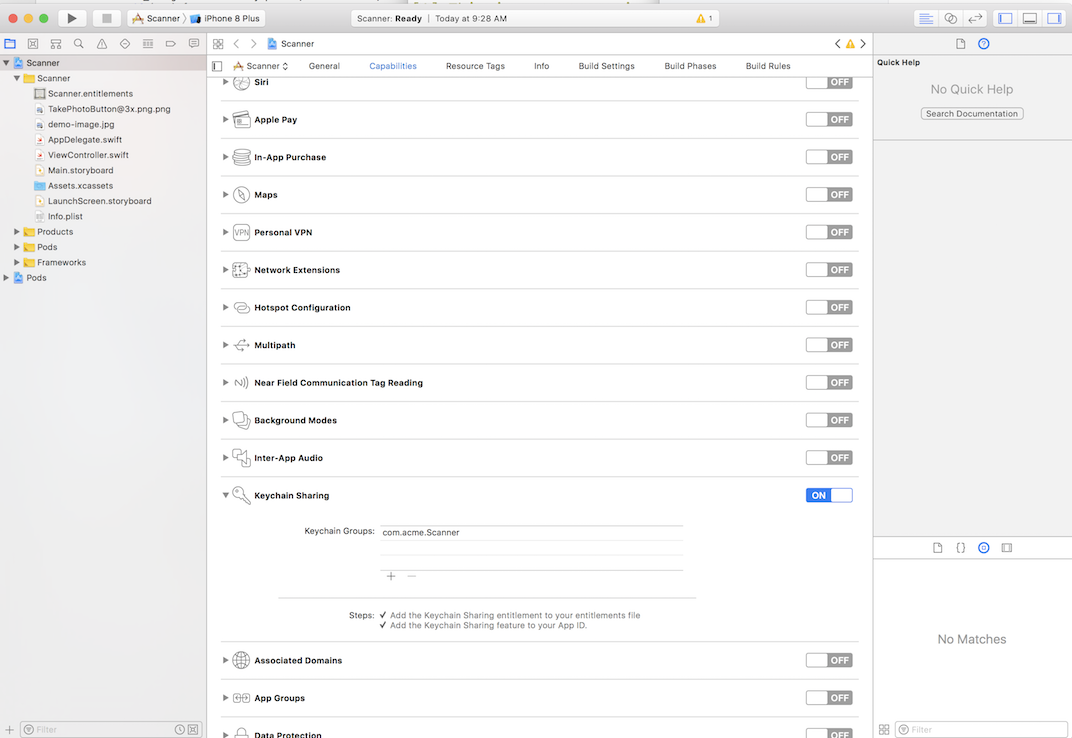

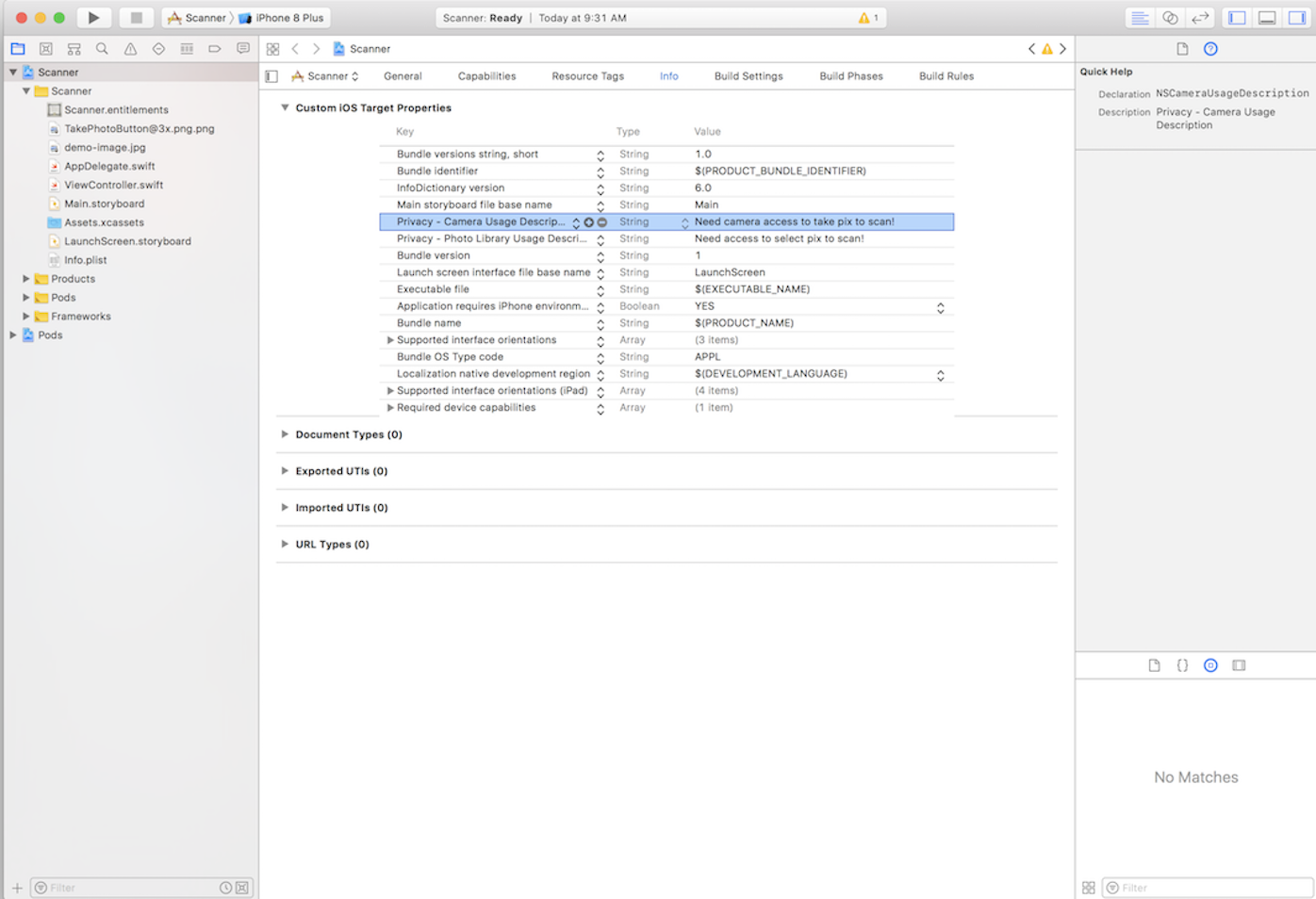

Setting the Application Entitlements - this app needs keychain sharing enabled and two special keys that allow access to the iPhone’s camera and photo library. Click the Scanner project icon in the source browser and add/edit the following:

- In the Capabilities section, set Keychain Sharing to “On”.

- In the Info section, add 2 new keys to the Custom iOS Target Properties: “Privacy - Photo Library Usage Description” and “Privacy - Camera Usage Description”. These strings can be anything, but they are generally used to tell the user why the application needs access to the camera and photo library. When the app runs, the permission dialogs that request this access will show these strings.

- In the General section, find the Signing settings. Here you will need to select your team or profile; if you check “Automatically manage signing”, Xcode can manage the signing process for you (i.e., automatically set up any required provisioning profiles).

With the basic application settings out of the way, we are now ready to implement the app that will make use of the Global Notifier script you finished previously.

Part II - Turning the Single View Template into the Scanner App

Adding a class extension to UIImage - we need to add a file to our project with a couple of utility methods that let us easily resize images for display and encode them for storage in our Realm database.
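The size check in the data() method of this extension is simple arithmetic: if the PNG encoding is larger than 2 MB (2097152 bytes), the image is redrawn 800 wide with its height scaled proportionally and re-encoded as JPEG. The sketch below ports just that rule to JavaScript for illustration; targetSize is a hypothetical name.

```javascript
// JavaScript port, for illustration, of the sizing rule in UIImage.data():
// if the encoded image is larger than 2 MB, scale it to 800 wide and keep
// the aspect ratio; otherwise leave the size alone.
const MAX_IMAGE_BYTES = 2097152; // 2 * 1024 * 1024
const TARGET_WIDTH = 800;

function targetSize(width, height, encodedBytes) {
  if (encodedBytes <= MAX_IMAGE_BYTES) {
    return { width, height };
  }
  return { width: TARGET_WIDTH, height: (height / width) * TARGET_WIDTH };
}

console.log(targetSize(4032, 3024, 9000000)); // { width: 800, height: 600 }
console.log(targetSize(640, 480, 500000));    // { width: 640, height: 480 }
```

On the device these sizes are in points rather than pixels: UIGraphicsBeginImageContextWithOptions with a scale of 0.0 uses the screen's scale factor, so on a 3x device the resized bitmap is 2400 pixels wide. The JPEG produced at quality 0.7 is also not re-checked against the limit.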

Add a new Swift source file called UIImage+Encoding.swift to your project. It can be anywhere in your project’s folders, but a convention is to put extensions either in a folder called “Extensions” or with the rest of the project’s implementation files. Add the following code to the file, then save and close it.

import Foundation

import UIKit

extension UIImage {

func resizeImage(_ image: UIImage, size: CGSize) -> UIImage {

UIGraphicsBeginImageContextWithOptions(size, false, 0.0)

image.draw(in: CGRect(origin: CGPoint.zero, size: size))

let resizedImage = UIGraphicsGetImageFromCurrentImageContext()

UIGraphicsEndImageContext()

return resizedImage!

}

func data() -> Data {

var imageData = UIImagePNGRepresentation(self)

// Resize the image if it exceeds the 2MB API limit

if (imageData?.count)! > 2097152 {

let oldSize = self.size

let newSize = CGSize(width: 800, height: oldSize.height / oldSize.width * 800)

let newImage = self.resizeImage(self, size: newSize)

imageData = UIImageJPEGRepresentation(newImage, 0.7)

}

return imageData!

}

func base64EncodedString() -> String {

let imageData = self.data()

let stringData = imageData.base64EncodedString(options: .endLineWithCarriageReturn)

return stringData

}

}

Creating the Realm model - The model for the Scanner app is very simple: add another new Swift source file to your project and name it Scan.swift. Copy and paste the text below into the file and save it.

import UIKit

import RealmSwift

class Scan: Object {

dynamic var scanId = ""

dynamic var status = ""

dynamic var textScanResult:String?

dynamic var classificationResult:String?

dynamic var faceDetectionResult:String?

dynamic var imageData: Data?

}

Most of the fields should be self-explanatory. This model will be automatically instantiated on the local device, and then synchronized with the Realm Object Server as you take/select pictures and tell the app to scan them.
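One detail worth knowing when imageData reaches the server: data() stores PNG bytes when the image is under the 2 MB limit and JPEG bytes otherwise, while index.js always writes the bytes to ./subject.jpeg. If you want the temporary file named after its real format, the sketch below checks the standard PNG and JPEG signatures (magic bytes). imageExtension is a hypothetical helper, and whether Watson relies on the file extension at all is an assumption this tutorial does not establish.

```javascript
// Hypothetical helper: identify PNG or JPEG data from its leading bytes.
// PNG files start with 0x89 'P' 'N' 'G'; JPEG files start with 0xFF 0xD8 0xFF.
function imageExtension(bytes) {
  const b = Buffer.from(bytes); // accepts a Uint8Array or an ArrayBuffer
  if (b.length >= 4 && b[0] === 0x89 && b[1] === 0x50 && b[2] === 0x4e && b[3] === 0x47) {
    return "png";
  }
  if (b.length >= 3 && b[0] === 0xff && b[1] === 0xd8 && b[2] === 0xff) {
    return "jpeg";
  }
  return null; // unknown format
}

const pngHeader = new Uint8Array([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);
const jpegHeader = new Uint8Array([0xff, 0xd8, 0xff, 0xe0]);
console.log(imageExtension(pngHeader), imageExtension(jpegHeader)); // png jpeg
```

In index.js the file name could then be built as "./subject." + (imageExtension(scan.imageData) || "jpeg").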

Updating the View Controller - We are going to replace all of our template app’s boilerplate code with a very simple view that can load an image from the device’s photo library or camera and save it to the Realm; once the image is synced, the Global Notifier on the Object Server sends it to Watson for analysis.

Our layout will be simple, yet functional, and when run will look very much like this:

It has 3 main areas: an image display area, a text area to show results, and a status area with a label showing the current state of the image-processing operation and buttons to select/process an image and to reset the app for a new image selection.

Adding in the View setup and display code - Open ViewController.swift and remove the viewDidLoad and didReceiveMemoryWarning methods (make sure not to remove the final closing brace; doing so leads to hard-to-debug errors).

Adopting the ImagePicker protocol - Near the top of the file, in the class declaration, change UIViewController to “UIViewController, UIImagePickerControllerDelegate, UINavigationControllerDelegate”. This lets the view controller act as the delegate of an image picker (UIImagePickerController’s delegate must conform to both protocols). The updated class declaration will look like this:

class ViewController: UIViewController, UIImagePickerControllerDelegate, UINavigationControllerDelegate {

Next, just after the class declaration, add the following code to declare the UI elements our ViewController will display and the Realm variables needed for the syncing and scanning process:

// UI Elements

let userImage = UIImageView()

let resultsTextView = UITextView()

let statusTextLabel = UILabel()

let scanButton = UIButton()

let resetButton = UIButton()

var imageLoaded = false

let backgroundImage = UIImage(named: "TakePhotoButton@3x")

//Realm variables

var realm: Realm?

var currentScan: Scan?

Adding the ViewController lifecycle methods - these take care of setting up and updating the view as part of the application lifecycle (the final method hides the status bar so we can see our images more clearly):

override func viewDidLoad() {

super.viewDidLoad()

setupViewAndConstraints()

}

override func viewDidAppear(_ animated: Bool) {

super.viewDidAppear(animated)

updateUI()

}

override var prefersStatusBarHidden: Bool {

return true

}

Adding the autolayout and view management methods - Near the bottom of the file we’ll add code that sets up and manages these elements. This is a fairly large function with a lot of code that isn’t really relevant to using the Realm Object Server; its job is to set up all of the views, buttons, etc. using Auto Layout, along with a couple of utility methods that handle view updates:

// MARK: View Setup and management

func setupViewAndConstraints() {

let allViews: [String : Any] = ["userImage": userImage, "resultsTextView": resultsTextView, "statusTextLabel": statusTextLabel, "scanButton": scanButton, "resetButton": resetButton]

var allConstraints = [NSLayoutConstraint]()

let metrics = ["imageHeight": self.view.bounds.width, "borderWidth": 10.0]

// all of our views are created by hand when the controller loads;

// make sure they are subviews of this ViewController, else they won't show up,

allViews.forEach { (k,v) in

self.view.addSubview(v as! UIView)

}

// an ImageView that will hold an image from the camera or photo library

userImage.translatesAutoresizingMaskIntoConstraints = false

userImage.contentMode = .scaleAspectFit

userImage.isHidden = false

userImage.isUserInteractionEnabled = false

userImage.backgroundColor = .lightGray

// a text view to hold the results (if any) returned by the Watson service

resultsTextView.translatesAutoresizingMaskIntoConstraints = false

resultsTextView.isHidden = false

resultsTextView.alpha = 0.75

resultsTextView.isScrollEnabled = true

resultsTextView.showsVerticalScrollIndicator = true

resultsTextView.showsHorizontalScrollIndicator = true

resultsTextView.textColor = .black

resultsTextView.text = ""

resultsTextView.textAlignment = .left

resultsTextView.layer.borderWidth = 0.5

resultsTextView.layer.borderColor = UIColor.lightGray.cgColor

// the status label showing the state of the backend ROS Global Notifier or OCR API status

statusTextLabel.translatesAutoresizingMaskIntoConstraints = false

statusTextLabel.backgroundColor = .clear

statusTextLabel.isEnabled = true

statusTextLabel.textAlignment = .center

statusTextLabel.text = ""

// Button that starts the scan

scanButton.translatesAutoresizingMaskIntoConstraints = false

scanButton.backgroundColor = .darkGray

scanButton.isEnabled = true

scanButton.setTitle(NSLocalizedString("Tap to select an image...", comment: "select img"), for: .normal)

scanButton.addTarget(self, action: #selector(selectImagePressed(sender:)), for: .touchUpInside)

// Button to reset and pick a new image

resetButton.translatesAutoresizingMaskIntoConstraints = false

resetButton.backgroundColor = .purple

resetButton.isEnabled = true

resetButton.setTitle(NSLocalizedString("Reset", comment: "reset"), for: .normal)

resetButton.addTarget(self, action: #selector(resetButtonPressed(sender:)), for: .touchUpInside)

// Set up all the placement & constraints for the elements in this view

self.view.translatesAutoresizingMaskIntoConstraints = false

let verticalConstraints = NSLayoutConstraint.constraints(

withVisualFormat: "V:|-[userImage(imageHeight)]-[resultsTextView(>=100)]-[statusTextLabel(21)]-[scanButton(50)]-[resetButton(50)]-(borderWidth)-|",

options: [], metrics: metrics, views: allViews)

allConstraints += verticalConstraints

let userImageHConstraint = NSLayoutConstraint.constraints(

withVisualFormat: "H:|[userImage]|",

options: [],

metrics: metrics,

views: allViews)

allConstraints += userImageHConstraint

let resultsTextViewHConstraint = NSLayoutConstraint.constraints(

withVisualFormat: "H:|-[resultsTextView]-|", options: [],

metrics: metrics, views: allViews)

allConstraints += resultsTextViewHConstraint

let statusTextlabelHConstraint = NSLayoutConstraint.constraints(

withVisualFormat: "H:|-[statusTextLabel]-|",

options: [],

metrics: metrics,

views: allViews)

allConstraints += statusTextlabelHConstraint

let scanButtonHConstraint = NSLayoutConstraint.constraints(

withVisualFormat: "H:|-[scanButton]-|",

options: [],

metrics: metrics,

views: allViews)

allConstraints += scanButtonHConstraint

let resetButtonHConstraint = NSLayoutConstraint.constraints(

withVisualFormat: "H:|-[resetButton]-|",

options: [],

metrics: metrics,

views: allViews)

allConstraints += resetButtonHConstraint

self.view.addConstraints(allConstraints)

}

func updateImage(_ image: UIImage?) {

DispatchQueue.main.async( execute: {

self.userImage.image = image

self.imageLoaded = true

})

}

func updateUI(shouldReset: Bool = false){

DispatchQueue.main.async( execute: {

if (shouldReset == true && self.imageLoaded == true) || self.imageLoaded == false {

// here if just launched or the user has reset the app

self.userImage.image = self.backgroundImage

self.imageLoaded = false

} else {

// just update the UI with whatever we've got from the back end for the last scan

self.statusTextLabel.text = self.currentScan?.status

// NB: there's a chance that the currentScan has been nil'd out by a user reset;

// in this case just set the text label to empty, otherwise we'll crash on a nil dereference

self.resultsTextView.text = [self.currentScan?.classificationResult, self.currentScan?.faceDetectionResult, self.currentScan?.textScanResult]

.flatMap({$0}).joined(separator:"\n\n")

}

})

}

Lastly, we will add the code that performs all of the interactions with the Realm Object Server and the Global Notifier:

// MARK: Realm Interactions

func submitImageToRealm() {

SyncUser.logIn(with: .usernamePassword(username: "YOUR USERNAME", password: "YOUR PASSWORD"), server: URL(string: "http://\(kRealmObjectServerHost)")!, onCompletion: {

user, error in

DispatchQueue.main.async {

guard let user = user else {

let alertController = UIAlertController(title: NSLocalizedString("Error", comment: "Error"), message: error?.localizedDescription, preferredStyle: .alert)

alertController.addAction(UIAlertAction(title: NSLocalizedString("Try Again", comment: "Try Again"), style: .default, handler: { (action) in

self.submitImageToRealm()

}))

alertController.addAction(UIAlertAction(title: NSLocalizedString("Cancel", comment: "Cancel"), style: .cancel, handler: nil))

self.updateUI(shouldReset: true)

self.present(alertController, animated: true)

return

}

// Open Realm

let configuration = Realm.Configuration(

syncConfiguration: SyncConfiguration(user: user, realmURL: URL(string: "realm://\(kRealmObjectServerHost)/~/scanner")!))

self.realm = try! Realm(configuration: configuration)

// Prepare the scan object

self.prepareToScan()

self.currentScan?.imageData = self.userImage.image!.data()

self.saveScan()

}

})

}

func beginImageLookup() {

updateResetButton()

submitImageToRealm()

}

func prepareToScan() {

if let realm = currentScan?.realm {

try! realm.write {

realm.delete(currentScan!)

}

}

currentScan = Scan()

}

func saveScan() {

guard currentScan?.realm == nil else {

return

}

statusTextLabel.text = "Saving..."

try! realm?.write {

realm?.add(currentScan!)

currentScan?.status = Status.Uploading.rawValue

}

statusTextLabel.text = "Uploading..."

self.currentScan?.addObserver(self, forKeyPath: "status", options: .new, context: nil)

}

override func observeValue(forKeyPath keyPath: String?, of object: Any?,

change: [NSKeyValueChangeKey : Any]?, context: UnsafeMutableRawPointer?) {

guard keyPath == "status" && change?[NSKeyValueChangeKey.newKey] != nil else {

return

}

let currentStatus = Status(rawValue: change?[NSKeyValueChangeKey.newKey] as! String)!

switch currentStatus {

case .ClassificationResultReady, .TextScanResultReady, .FaceDetectionResultReady:

self.updateUI()

self.updateResetButton()

try! self.currentScan?.realm?.write {

self.currentScan?.status = Status.Completed.rawValue

}

case .Failed:

self.updateUI()

try! self.currentScan?.realm?.write {

realm?.delete(self.currentScan!)

}

self.currentScan = nil

case .Processing, .Completed:

self.updateUI()

default: return

}

}

There are four key methods here that do the critical work:

-

prepareToScan(): This method deletes any previous scan and creates a new Scan object; this is the object that will be synchronized with the Realm Object Server.

-

submitImageToRealm(): This is where the application authenticates with the Realm Object Server and opens the “scanner” Realm. You will need to replace the “YOUR USERNAME” and “YOUR PASSWORD” placeholders with the admin username and password you used when you registered your copy of RMP/PE. The method then stores the selected image, converted to data, in the new Scan object.

-

saveScan(): This method adds the Scan object created by prepareToScan(), which now holds the selected image, to the synchronized Realm and sets its status to Uploading. Once the scan is saved, the method registers an observer on the saved Scan object in order to watch for results from the Watson service, which is called by our ScannerServer using the Realm Global Sync Notifier.

-

observeValue(forKeyPath:of:change:context:): This method isn’t specific to Realm; it is part of a Cocoa feature called Key-Value Observing (“KVO”), which allows observers to be registered to watch for changes in properties of objects and data structures. In this case, in the saveScan() method, we ask the runtime to notify us when the status of a scan we’ve synchronized changes. When it does, the code reacts by updating the status label and displaying any results returned from the Watson service.
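The Android half of this tutorial is written in Java, so it may help to see the same observe-one-property idea outside Cocoa. The sketch below is only an analogy, assuming plain Java with `java.beans.PropertyChangeSupport`: the `ObservableScan` class is hypothetical and unrelated to Realm's API, but like the KVO registration in saveScan() it notifies a listener each time the “status” value changes.

```java
import java.beans.PropertyChangeListener;
import java.beans.PropertyChangeSupport;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a synced Scan object; an analogy to KVO, not Realm's API.
class ObservableScan {
    private final PropertyChangeSupport changes = new PropertyChangeSupport(this);
    private String status = "Uploading";

    // Comparable to addObserver(self, forKeyPath: "status", ...) in saveScan():
    // the listener only hears about the "status" property.
    void addStatusListener(PropertyChangeListener listener) {
        changes.addPropertyChangeListener("status", listener);
    }

    String getStatus() {
        return status;
    }

    void setStatus(String newStatus) {
        String oldStatus = status;
        status = newStatus;
        // No event is fired when the old and new values are equal and non-null.
        changes.firePropertyChange("status", oldStatus, newStatus);
    }

    public static void main(String[] args) {
        ObservableScan scan = new ObservableScan();
        List<String> seen = new ArrayList<>();
        scan.addStatusListener(event -> seen.add((String) event.getNewValue()));
        scan.setStatus("Processing");
        scan.setStatus("Processing"); // unchanged value, so no notification
        scan.setStatus("TextScanResultReady");
        System.out.println(seen); // [Processing, TextScanResultReady]
    }
}
```

As with KVO, the observer reacts to changes rather than polling; Realm Java offers its own change listeners for the same purpose.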

Putting it all together

At the end of the section on Building the Server Application, you created and started a small Node.js application containing a Realm Global Sync listener, which should now be waiting for your iOS app to connect and sync images. Now all that’s left to do is fire up your Scanner app and see how it works.

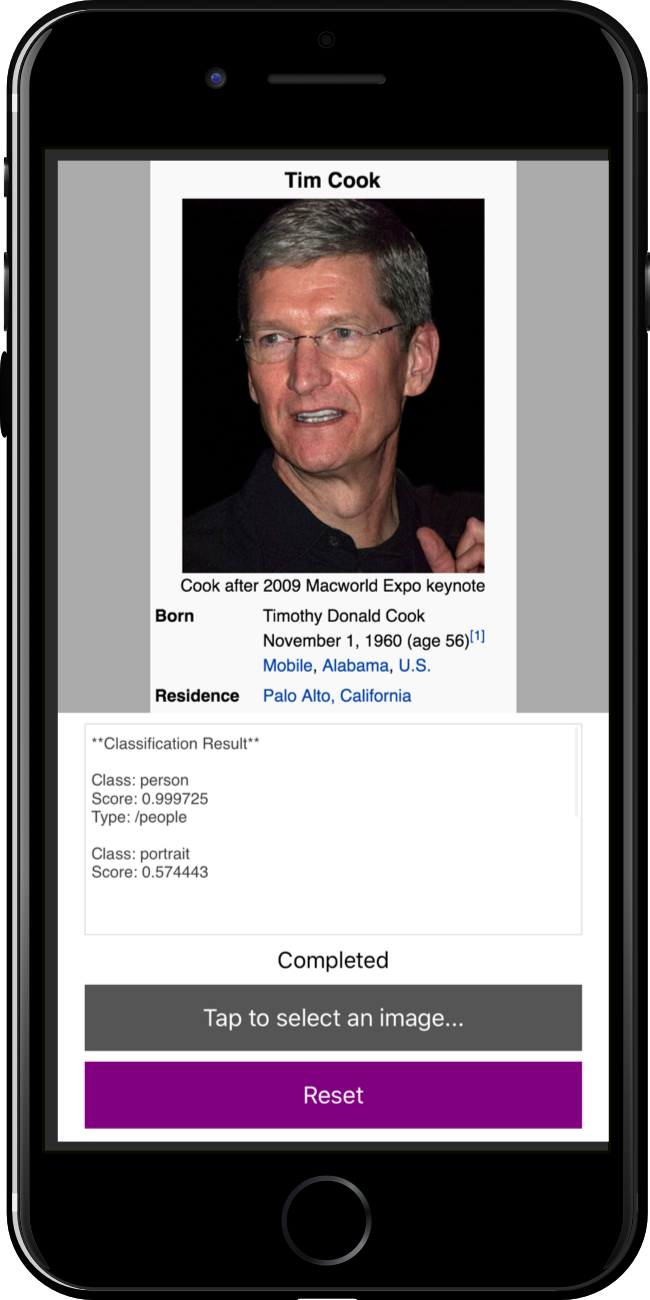

Running the app should be as simple as pressing Build/Run. Barring any typos or syntax errors, Xcode will build the Scanner app and run it in the iOS simulator. Once the app is running, tap the “Tap to select an image…” button, and then select “Choose from Library…”. This will cause the app to use the built-in demo image we downloaded when we created the template application. The app should look very much like this:

Once the app has synchronized the image file with the Realm Object Server, the Global Sync Notifier application we created will send the image to IBM’s Watson service. After a moment the results that come back will be displayed in your app:

If you have an active Apple developer account you can run this on real hardware and try it with your own images.

Scanner for Android

Prerequisites: This project uses Android Studio. For more information on Android Studio, please see https://developer.android.com/studio/index.html

Part I - Create and configure a Scanner Project with Android

Open Android Studio and click “Start a new Android Studio project”. Name the application “Scanner”. “Company Domain” can be any domain name, and “Project location” can be any convenient location on your drive. Click the “Next” button.

The next window lets you select the form factors. Select “Phone and Tablet” and click “Next”. You don’t need to modify “Minimum SDK” at this time.

The next screen lets you select an activity type to add to your app. Select “Empty Activity” and click “Next”. Because we are going to replace the layout ourselves, you don’t need to select a pre-designed one.

Leave “Activity Name” as “MainActivity”, and also leave “Layout Name” as “activity_main”. Click the “Finish” button to complete the “Create New Project” wizard. This tutorial uses only one activity, so its name is not important.

You will find a build script named “build.gradle” in two locations: one in the project root, and the other under the “app” directory. Modify the project-level “build.gradle” file in the project root to add the Realm dependency, as shown below. We add “classpath ‘io.realm:realm-gradle-plugin:4.0.0’” to the dependencies block of buildscript, which makes the Realm plug-in available to the project. If you are using a newer version of Realm Java, change the plug-in version number accordingly.

// Top-level build file where you can add configuration options common to all sub-projects/modules.

buildscript {

repositories {

jcenter()

}

dependencies {

classpath 'com.android.tools.build:gradle:2.3.3'

classpath 'io.realm:realm-gradle-plugin:4.0.0'

}

}

allprojects {

repositories {

jcenter()

}

}

task clean(type: Delete) {

delete rootProject.buildDir

}

Now, we are going to change the build script at the “app” level. First, add “apply plugin: ‘realm-android’” directly below “apply plugin: ‘com.android.application’”; this applies the Realm plug-in, which adds the Realm Java dependency to the app. Second, change “compileSdkVersion” and “targetSdkVersion” to 23. These version changes are not required to use Realm Java; they only keep the example simple, because retrieving the photo after launching the camera became considerably more complicated starting with API level 24 (passing a file:// URI to another app, as this tutorial does, throws a FileUriExposedException when targetSdkVersion is 24 or higher).

apply plugin: 'com.android.application'

apply plugin: 'realm-android'

android {

compileSdkVersion 23

buildToolsVersion "25.0.1"

defaultConfig {

applicationId "example.io.realm.scanner"

minSdkVersion 15

targetSdkVersion 23

versionCode 1

versionName "1.0"

}

...

dependencies {

...

compile 'com.android.support:appcompat-v7:25.3.1'

...

}

Now we need the address of the server, so we add a setting for it to the build script. As shown below, we look up the canonical host name of the machine running the build, which in this test also runs the server, and store it in the “BuildConfig.OBJECT_SERVER_IP” constant. Each “buildConfigField” entry becomes a constant in the Java class BuildConfig, which is generated when the app is built.

android {

...

buildTypes {

def host = InetAddress.getLocalHost().getCanonicalHostName()

debug {

buildConfigField "String", "OBJECT_SERVER_IP", "\"${host}\""

}

release {

minifyEnabled false

proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'

buildConfigField "String", "OBJECT_SERVER_IP", "\"${host}\""

}

}

}

One last setting remains. Add the following code at the end of the “app/build.gradle” file. It enables the synchronization feature of Realm Java; without this option, synchronization is not available.

realm {

syncEnabled = true

}

Part II - Register models and settings

Let’s start by building two models for Scanner: “LabelScan” and “LabelScanResult”. When the app fills in a “LabelScan” and syncs it to the server, the server fills in the data of its “LabelScanResult”, which is then synchronized back to the app. Implement the first model, “LabelScan”, as follows:

import io.realm.RealmObject;

import io.realm.annotations.Required;

public class LabelScan extends RealmObject {

@Required

private String scanId;

@Required

private String status;

private LabelScanResult result;

private byte[] imageData;

public String getScanId() {

return scanId;

}

public void setScanId(String scanId) {

this.scanId = scanId;

}

public String getStatus() {

return status;

}

public void setStatus(String status) {

this.status = status;

}

public LabelScanResult getResult() {

return result;

}

public void setResult(LabelScanResult result) {

this.result = result;

}

public byte[] getImageData() {

return imageData;

}

public void setImageData(byte[] imageData) {

this.imageData = imageData;

}

}

Now, implement “LabelScanResult” to hold the result.

import io.realm.RealmObject;

public class LabelScanResult extends RealmObject {

private String textScanResult;

private String classificationResult;

private String faceDetectionResult;

public String getTextScanResult() {

return textScanResult;

}

public void setTextScanResult(String textScanResult) {

this.textScanResult = textScanResult;

}

public String getClassificationResult() {

return classificationResult;

}

public void setClassificationResult(String classificationResult) {

this.classificationResult = classificationResult;

}

public String getFaceDetectionResult() {

return faceDetectionResult;

}

public void setFaceDetectionResult(String faceDetectionResult) {

this.faceDetectionResult = faceDetectionResult;

}

}

Now, extend “Application” to create “ScannerApplication”. We’ll add code that initializes Realm Java and enables verbose logging:

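import android.app.Application;

import android.util.Log;

import io.realm.Realm;

import io.realm.log.RealmLog;

public class ScannerApplication extends Application {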

@Override

public void onCreate() {

super.onCreate();

Realm.init(this);

RealmLog.setLevel(Log.VERBOSE);

}

}

Modify “AndroidManifest.xml” to register “ScannerApplication” as the application class and to add two camera-related settings: the camera feature and the storage permission.

<manifest package="io.realm.scanner"

xmlns:android="http://schemas.android.com/apk/res/android">

<uses-feature

android:name="android.hardware.camera"

android:required="true"/>

<uses-permission

android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

<application

android:name="io.realm.scanner.ScannerApplication"

…

Part III - Create layout

Let’s first look at the whole layout file, and then at the details.

<?xml version="1.0" encoding="utf-8"?>

<FrameLayout

android:id="@+id/activity_main"

xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context="io.realm.scanner.MainActivity">

<RelativeLayout

android:id="@+id/capture_panel"

android:layout_width="match_parent"

android:layout_height="match_parent">

<ImageButton

android:id="@+id/take_photo"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_centerHorizontal="true"

android:layout_marginTop="32dp"

android:background="#F9F9F9"

android:src="@drawable/take_photo"/>

<TextView

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_below="@id/take_photo"

android:layout_centerHorizontal="true"

android:layout_marginTop="32dp"

android:text="Select an Image to Begin"/>

</RelativeLayout>

<ScrollView

android:id="@+id/scanned_panel"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:visibility="gone">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<ImageView

android:id="@+id/image"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:adjustViewBounds="true"

android:scaleType="fitCenter"/>

<TextView

android:id="@+id/description"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_below="@id/image"

android:layout_margin="16dp"/>

</LinearLayout>

</ScrollView>

<RelativeLayout

android:id="@+id/progress_panel"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:visibility="gone">

<ProgressBar

style="?android:attr/progressBarStyleLarge"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_centerInParent="true"

android:layout_margin="36dp"/>

</RelativeLayout>

</FrameLayout>

The “FrameLayout” has three children: a “RelativeLayout”, a “ScrollView”, and another “RelativeLayout”, in that order. The first “RelativeLayout” holds the camera button. The “ScrollView” shows the captured image and the results. The last “RelativeLayout” contains the “ProgressBar” shown while loading.
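Because the three children are stacked in the same “FrameLayout”, the activity is meant to show exactly one of them at a time. A minimal sketch of that invariant in plain Java, assuming a hypothetical `PanelVisibility` helper, is a lookup table with one entry per panel; it reuses the panel names CAPTURE, SCANNED and PROGRESS that MainActivity defines, and in the activity true and false would correspond to View.VISIBLE and View.GONE.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical helper illustrating the "exactly one panel visible" rule.
class PanelVisibility {
    enum Panel { CAPTURE, SCANNED, PROGRESS }

    // Builds a table in which only the active panel is marked visible.
    static Map<Panel, Boolean> visibilityFor(Panel active) {
        Map<Panel, Boolean> visible = new EnumMap<>(Panel.class);
        for (Panel panel : Panel.values()) {
            visible.put(panel, panel == active);
        }
        return visible;
    }

    public static void main(String[] args) {
        // EnumMap iterates in declaration order.
        System.out.println(visibilityFor(Panel.PROGRESS));
        // {CAPTURE=false, SCANNED=false, PROGRESS=true}
    }
}
```

A table like this lets a view-toggling method loop over the panels instead of spelling out every combination; for only three panels, an explicit if/else chain is equally reasonable.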

Part IV - From a single Activity to a scanner application

Define the constants for the server connection in “MainActivity”.

private static final String REALM_URL = "realm://" + BuildConfig.OBJECT_SERVER_IP + ":9080/~/Scanner";

private static final String AUTH_URL = "http://" + BuildConfig.OBJECT_SERVER_IP + ":9080/auth";

private static final String ID = "scanner@realm.io";

private static final String PASSWORD = "password";

We use one arbitrary constant both as the buffer size for reading images and as the base value for the “onActivityResult” request codes. It is set to the 1000th prime number simply because that is a sufficiently large and unusual number.
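The 1000th prime is 7919. If you want to double-check a “magic” constant like this, a short sieve of Eratosthenes is enough; this standalone `NthPrime` helper is hypothetical and only meant for verification, not something the app needs at runtime.

```java
// Hypothetical verification helper; not part of the Scanner app.
class NthPrime {
    // Returns the n-th prime (1-based) using a sieve of Eratosthenes up to 'limit'.
    static int nthPrime(int n, int limit) {
        boolean[] composite = new boolean[limit + 1];
        int count = 0;
        for (int i = 2; i <= limit; i++) {
            if (!composite[i]) {
                count++;
                if (count == n) {
                    return i;
                }
                // Mark multiples of i, starting at i * i (long avoids int overflow).
                for (long j = (long) i * i; j <= limit; j += i) {
                    composite[(int) j] = true;
                }
            }
        }
        throw new IllegalArgumentException("limit " + limit + " is too small for n = " + n);
    }

    public static void main(String[] args) {
        // 10,000 is comfortably above the 1000th prime.
        System.out.println(nthPrime(1000, 10_000)); // 7919
    }
}
```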

private static final int PRIME_NUMBER_1000th = 7919;

We use two request codes for “onActivityResult”. They are passed when starting the camera or gallery activity, and checked when a result comes back to tell which request it answers.

private static final int REQUEST_SELECT_PHOTO = PRIME_NUMBER_1000th;

private static final int REQUEST_IMAGE_CAPTURE = REQUEST_SELECT_PHOTO + 1;

Now, let’s define the fields of the activity.

private Realm realm;

private LabelScan currentLabelScan;

private ImageButton takePhoto;

private ImageView image;

private TextView description;

private View capturePanel;

private View scannedPanel;

private View progressPanel;

private String currentPhotoPath;

Additionally, define an enumeration and constants for “MainActivity”.

enum Panel {

CAPTURE, SCANNED, PROGRESS

}

class StatusLiteral {

public static final String UPLOADING = "Uploading";

public static final String FAILED = "Failed";

public static final String CLASSIFICATION_RESULT_READY = "ClassificationResultReady";

public static final String TEXTSCAN_RESULT_READY = "TextScanResultReady";

public static final String FACE_DETECTION_RESULT_READY = "FaceDetectionResultReady";

public static final String COMPLETED = "Completed";

}

Add the code that sets up the views in the “onCreate” method.

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

capturePanel = findViewById(R.id.capture_panel);

scannedPanel = findViewById(R.id.scanned_panel);

progressPanel = findViewById(R.id.progress_panel);

takePhoto = (ImageButton) findViewById(R.id.take_photo);

image = (ImageView) findViewById(R.id.image);

description = (TextView) findViewById(R.id.description);

takePhoto.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

if (realm != null) {

showCommandsDialog();

}

}

});

takePhoto.setVisibility(View.GONE);

takePhoto.setClickable(false);

showPanel(Panel.CAPTURE);

…

}

Now it’s time to create the “showCommandsDialog” and “showPanel” methods. “showCommandsDialog” offers two choices, which call “dispatchTakePicture” or “dispatchSelectPhoto” to open the camera or the gallery respectively. “showPanel” makes one of the three panels visible and hides the other two.

private void showCommandsDialog() {

final CharSequence[] items = {

"Take with Camera",

"Choose from Library"

};

final AlertDialog.Builder builder = new AlertDialog.Builder(this);

builder.setItems(items, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

switch(i) {

case 0:

dispatchTakePicture();

break;

case 1:

dispatchSelectPhoto();

break;

}

}

});

builder.create().show();

}

private void showPanel(Panel panel) {

if (panel.equals(Panel.SCANNED)) {

capturePanel.setVisibility(View.GONE);

scannedPanel.setVisibility(View.VISIBLE);

progressPanel.setVisibility(View.GONE);

} else if (panel.equals(Panel.CAPTURE)) {

capturePanel.setVisibility(View.VISIBLE);

scannedPanel.setVisibility(View.GONE);

progressPanel.setVisibility(View.GONE);

} else if (panel.equals(Panel.PROGRESS)) {

capturePanel.setVisibility(View.GONE);

scannedPanel.setVisibility(View.GONE);

progressPanel.setVisibility(View.VISIBLE);

}

}

First, let’s take a look at “dispatchTakePicture”, which opens the camera. The following code uses “startActivityForResult” to request a photo from the camera through an intent and to get the result back.

private void dispatchTakePicture() {

Intent takePictureIntent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);

if (takePictureIntent.resolveActivity(getPackageManager()) != null) {

File photoFile = null;

try {

photoFile = createImageFile();

} catch (IOException e) {

e.printStackTrace();

}

if (photoFile != null) {

currentPhotoPath = photoFile.getAbsolutePath();

Uri photoURI = Uri.fromFile(photoFile);

takePictureIntent.putExtra(MediaStore.EXTRA_OUTPUT, photoURI);

startActivityForResult(takePictureIntent, REQUEST_IMAGE_CAPTURE);

}

}

}

private File createImageFile() throws IOException {

String timeStamp = new SimpleDateFormat("yyyyMMdd_HHmmss").format(new Date());

String imageFileName = "JPEG_" + timeStamp + "_";

File storageDir = getExternalFilesDir(Environment.DIRECTORY_PICTURES);

File image = File.createTempFile(imageFileName, ".jpg", storageDir);

return image;

}

Next, let’s create “dispatchSelectPhoto”, which opens the gallery. This method is much simpler than the previous one because we don’t need to create a file.

private void dispatchSelectPhoto() {

Intent photoPickerIntent = new Intent(Intent.ACTION_PICK);

photoPickerIntent.setType("image/*");

startActivityForResult(photoPickerIntent, REQUEST_SELECT_PHOTO);

}

Next, add the code for synchronizing with the Realm Object Server to “onCreate”, below the view setup. We use “SyncCredentials” to pass the authentication information and build a “SyncConfiguration” for opening a Realm instance from the previously declared constants. Error handling is omitted to keep the example simple.

final SyncCredentials syncCredentials = SyncCredentials.usernamePassword(ID, PASSWORD, false);

SyncUser.loginAsync(syncCredentials, AUTH_URL, new SyncUser.Callback<SyncUser>() {

@Override

public void onSuccess(@NonNull SyncUser user) {

final SyncConfiguration syncConfiguration = new SyncConfiguration.Builder(user, REALM_URL).build();

Realm.setDefaultConfiguration(syncConfiguration);

realm = Realm.getDefaultInstance();

takePhoto.setVisibility(View.VISIBLE);

takePhoto.setClickable(true);

}

@Override

public void onError(@NonNull ObjectServerError error) {

}

});

Now, add the code that closes the Realm when the activity is destroyed.

@Override

protected void onDestroy() {

super.onDestroy();

cleanUpCurrentLabelScanIfNeeded();

if (realm != null) {

realm.close();

realm = null;

}

showPanel(Panel.CAPTURE);

}

Add the code for the options menu at the top right. Its item is enabled only once all three results have arrived, and selecting it resets the app for a new scan:

@Override

public boolean onCreateOptionsMenu(Menu menu) {

getMenuInflater().inflate(R.menu.main, menu);

MenuItem item = menu.getItem(0);

item.setEnabled(false);

return true;

}

@Override

public boolean onPrepareOptionsMenu(Menu menu) {

final MenuItem item = menu.getItem(0);

if (currentLabelScan != null) {

final LabelScanResult scanResult = currentLabelScan.getResult();

if (scanResult != null) {

final String textScanResult = scanResult.getTextScanResult();

final String classificationResult = scanResult.getClassificationResult();

final String faceDetectionResult = scanResult.getFaceDetectionResult();

if (textScanResult != null && classificationResult != null && faceDetectionResult != null) {

item.setEnabled(true);

return true;

}

}

}

item.setEnabled(false);

return true;

}

@Override

public boolean onOptionsItemSelected(MenuItem item) {

if (item.getItemId() == R.id.refresh) {

setTitle(R.string.app_name);

cleanUpCurrentLabelScanIfNeeded();

showPanel(Panel.CAPTURE);

return true;

}

return false;

}

private void cleanUpCurrentLabelScanIfNeeded() {

if (currentLabelScan != null) {

currentLabelScan.removeAllChangeListeners();

realm.beginTransaction();

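final LabelScanResult result = currentLabelScan.getResult();

// getResult() returns null if no LabelScanResult has been linked yet

if (result != null) {

result.deleteFromRealm();

}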

currentLabelScan.deleteFromRealm();

realm.commitTransaction();

currentLabelScan = null;

}

}

Now, let’s write the code that sends an image to the server when the user takes a picture or selects a photo from the gallery. “onActivityResult” receives “REQUEST_IMAGE_CAPTURE” as the request code when the user has taken a photo, and “REQUEST_SELECT_PHOTO” when the user has selected an image.
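Both branches of the handler share the same sizing arithmetic: halve the width and height until neither exceeds 1600 pixels, doubling inSampleSize each time, so BitmapFactory receives a power-of-two subsampling factor. The selected-photo branch also copies the content stream into memory with a fixed-size buffer (and only resizes when the data exceeds IMAGE_LIMIT). A minimal sketch, assuming a hypothetical `ImageUploadMath` class, extracts those two pieces so they can be checked without a device; in the activity they run inline.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical extraction of the handler's sizing and copying logic.
class ImageUploadMath {
    static final int MAX_DIMENSION = 1600;

    // Power-of-two sample size that brings both sides down to at most maxDimension.
    static int inSampleSize(int width, int height, int maxDimension) {
        int sampleSize = 1;
        while (width > maxDimension || height > maxDimension) {
            sampleSize *= 2;
            width /= 2;
            height /= 2;
        }
        return sampleSize;
    }

    // Copies a stream into a byte array using a fixed-size buffer; the caller closes the stream.
    static byte[] readAll(InputStream in, int bufferSize) throws IOException {
        byte[] buffer = new byte[bufferSize];
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        // 4032 x 3024 -> 2016 x 1512 -> 1008 x 756, so the factor is 4.
        System.out.println(inSampleSize(4032, 3024, MAX_DIMENSION)); // 4
        byte[] data = readAll(new ByteArrayInputStream(new byte[20000]), 7919);
        System.out.println(data.length); // 20000
    }
}
```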

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

switch (requestCode) {

case REQUEST_SELECT_PHOTO:

if (resultCode == RESULT_OK) {

setTitle("Saving...");

final Uri imageUri = data.getData();

try {

final InputStream imageStream = getContentResolver().openInputStream(imageUri);

final byte[] readBytes = new byte[PRIME_NUMBER_1000th];

final ByteArrayOutputStream byteBuffer = new ByteArrayOutputStream();

int readLength;

while ((readLength = imageStream.read(readBytes)) != -1) {

byteBuffer.write(readBytes, 0, readLength);

}

imageStream.close();

cleanUpCurrentLabelScanIfNeeded();

byte[] imageData = byteBuffer.toByteArray();

if (imageData.length > IMAGE_LIMIT) {

BitmapFactory.Options options = new BitmapFactory.Options();

options.inJustDecodeBounds = true;

BitmapFactory.decodeByteArray(imageData, 0, imageData.length, options);

int outWidth = options.outWidth;

int outHeight = options.outHeight;

int inSampleSize = 1;

while (outWidth > 1600 || outHeight > 1600) {

inSampleSize *= 2;

outWidth /= 2;

outHeight /= 2;

}

options = new BitmapFactory.Options();

options.inSampleSize = inSampleSize;

final Bitmap bitmap = BitmapFactory.decodeByteArray(imageData, 0, imageData.length, options);

byteBuffer.reset();

bitmap.compress(Bitmap.CompressFormat.JPEG, 80, byteBuffer);

imageData = byteBuffer.toByteArray();

}

uploadImage(imageData);

} catch(FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

showPanel(Panel.PROGRESS);

setTitle("Uploading...");

}

}

break;

case REQUEST_IMAGE_CAPTURE:

if (resultCode == RESULT_OK && currentPhotoPath != null) {

setTitle("Saving...");

BitmapFactory.Options options = new BitmapFactory.Options();

options.inJustDecodeBounds = true;

BitmapFactory.decodeFile(currentPhotoPath, options);

int outWidth = options.outWidth;

int outHeight = options.outHeight;

int inSampleSize = 1;

while (outWidth > 1600 || outHeight > 1600) {

inSampleSize *= 2;

outWidth /= 2;

outHeight /= 2;

}

options = new BitmapFactory.Options();

options.inSampleSize = inSampleSize;

Bitmap bitmap = BitmapFactory.decodeFile(currentPhotoPath, options);

final ByteArrayOutputStream byteBuffer = new ByteArrayOutputStream();

bitmap.compress(Bitmap.CompressFormat.JPEG, 80, byteBuffer);

byte[] imageData = byteBuffer.toByteArray();

uploadImage(imageData);

showPanel(Panel.PROGRESS);

setTitle("Uploading...");

}

break;

}

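}

Finally, write the callback that handles the scan after the server has processed the image and synchronized the results back. This callback is registered by “currentLabelScan.addChangeListener(MainActivity.this);” in the “uploadImage” method, so “MainActivity” must implement “RealmChangeListener<LabelScan>”.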
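The callback builds the description by appending whichever of the three results have arrived, separated by a blank line, and sets the status to Completed only once all three are non-null. Those two rules can be sketched as small static helpers; `ScanResultText` is a hypothetical name used only for this illustration. The Swift updateUI() shown earlier follows the same idea with flatMap and joined(separator:), although it lists the results in a different order.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helpers mirroring the result handling in onChange.
class ScanResultText {
    // Joins the non-null results, in the order given, with a blank line between them.
    static String join(String... results) {
        List<String> present = new ArrayList<>();
        for (String result : results) {
            if (result != null) {
                present.add(result);
            }
        }
        return String.join("\n\n", present);
    }

    // True once every result has arrived, i.e. when the scan can be marked Completed.
    static boolean allPresent(String... results) {
        for (String result : results) {
            if (result == null) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(join("TEXT", null, "FACES").equals("TEXT\n\nFACES")); // true
        System.out.println(allPresent("TEXT", null, "FACES")); // false
    }
}
```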

@Override

public void onChange(LabelScan labelScan) {

final String status = labelScan.getStatus();

if (status.equals(StatusLiteral.FAILED)) {

setTitle("Failed to Process");

cleanUpCurrentLabelScanIfNeeded();

showPanel(Panel.CAPTURE);

} else if (status.equals(StatusLiteral.CLASSIFICATION_RESULT_READY) ||

status.equals(StatusLiteral.TEXTSCAN_RESULT_READY) ||

status.equals(StatusLiteral.FACE_DETECTION_RESULT_READY)) {

showPanel(Panel.SCANNED);

final byte[] imageData = labelScan.getImageData();

final Bitmap bitmap = BitmapFactory.decodeByteArray(imageData, 0, imageData.length);

image.setImageBitmap(bitmap);

final LabelScanResult scanResult = labelScan.getResult();

final String textScanResult = scanResult.getTextScanResult();

final String classificationResult = scanResult.getClassificationResult();

final String faceDetectionResult = scanResult.getFaceDetectionResult();

StringBuilder stringBuilder = new StringBuilder();

boolean shouldAppendNewLine = false;

if (textScanResult != null) {

stringBuilder.append(textScanResult);

shouldAppendNewLine = true;

}

if (classificationResult != null) {

if (shouldAppendNewLine) {

stringBuilder.append("\n\n");

}

stringBuilder.append(classificationResult);

shouldAppendNewLine = true;

}

if (faceDetectionResult != null) {

if (shouldAppendNewLine) {

stringBuilder.append("\n\n");

}

stringBuilder.append(faceDetectionResult);

}

description.setText(stringBuilder.toString());

if (textScanResult != null && classificationResult != null && faceDetectionResult != null) {

realm.beginTransaction();

labelScan.setStatus(StatusLiteral.COMPLETED);

realm.commitTransaction();

}

} else {

setTitle(status);

}

invalidateOptionsMenu();

}

You’ve now created an Android app that takes a photo or selects an image from the gallery and has it recognized through the Realm Object Server. Please refer to https://github.com/realm-demos/Scanner for the complete example.

If you want to learn more about Realm, try out our tutorial for the To Do List app.